Focus AI, the latest AI news in 3 minutes

Edition #102 - November 4th 2022

Feel free to subscribe or to share it.

This week:

❤️ A simple AI eye test could accurately predict a future fatal heart attack

🧼 AI Data Laundering: How Academic and Nonprofit Researchers Shield Tech Companies from Accountability

💬 Amazon releases a Q&A dataset called Mintaka

🎈 Measuring perception in AI models

💻 Want to train a big code model AND not annoy developers? 'BigCode’ might be the dataset for you

🖥 Meta announced its next-generation AI platform, Grand Teton

And about code:

[Book 📙] Aurélien Géron - 3rd Edition of Hands-on Machine Learning With Scikit-learn, Keras, and Tensorflow

[Post 🏬] Feature Store comparison: 4 Feature Stores - explained and compared

[Tuto 🎡] How to set up ML Monitoring with Evidently. A tutorial from CS 329S: Machine Learning Systems Design

== News ==

❤️ A simple AI eye test could accurately predict a future fatal heart attack

Heart disease is the leading cause of death globally with an estimated 17.9 million people dying from it each year, according to the World Health Organization (WHO).

The WHO says that early detection of heart disease - which often leads to heart attacks - could give patients precious time for treatment, which could save many lives.

Now researchers have found a simple eye test could be used to diagnose heart disease with the help of artificial intelligence (AI) and machine learning.

Previous research had investigated how the retina’s network of veins and arteries could provide early indications of heart disease.

That research looked at how the width of these blood vessels could be used to predict heart disease, but it wasn’t clear if the findings applied equally to men and women.

🧼 AI Data Laundering: How Academic and Nonprofit Researchers Shield Tech Companies from Accountability

In addition to the Shutterstock clips, Meta also used 10 million video clips from this 100M video dataset from Microsoft Research Asia. It’s not mentioned on their GitHub, but if you dig into the paper, you learn that every clip came from over 3 million YouTube videos.

So, in addition to a massive chunk of Shutterstock’s video collection, Meta is also using millions of YouTube videos collected by Microsoft to make its text-to-video

The academic researchers who compiled the Shutterstock dataset acknowledged the copyright implications in their paper, writing, “The use of data collected for this study is authorised via the Intellectual Property Office’s Exceptions to Copyright for Non-Commercial Research and Private Study.”

But then Meta is using those academic non-commercial datasets to train a model, presumably for future commercial use in their products. Weird, right?

💬 Amazon releases a Q&A dataset called Mintaka

Researchers with Amazon have released Mintaka, a dataset of 20,000 question-answer pairs written in English, annotated with Wikidata entities, and translated into Arabic, French, German, Hindi, Italian, Japanese, Portuguese, and Spanish. The total dataset consists of 180,000 samples, when you include the translated versions. Existing models get 38% on the dataset when testing in English and 31% multilingually.

Different types of questions and different types of complexity: Mintaka questions are spread across eight categories (movies, music, sports, books, geography, politics, video games, and history).

The questions have nine types of complexity. These complexity types consist of questions relating to counting something, comparing something, figuring out who was best and worst at something, working out the ordering of something, multi-hop questions that require two or more steps, intersectional questions where the answer must fulfill multiple conditions, questions involving negatives, yes/no questions, and worker-defined 'generic' questions.

🎈 Measuring perception in AI models

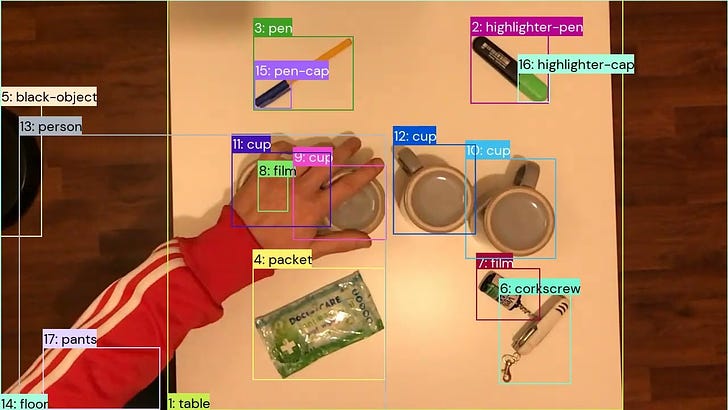

DeepMind has built and released the Perception Test, a new standardized benchmark (and associated dataset of ~11k videos) for evaluating how well multimodal systems perceive the world.

The test is "a benchmark formed of purposefully designed, filmed, and annotated real-world videos that aims to more comprehensively assess the capabilities of multimodal perception models across different perception skills, types of reasoning, and modalities," DeepMind says: Six tasks, one benchmark: The 'Perception Test' is made up of a dataset of ~11.6k videos that cover six fundamental tasks.

💻 Want to train a big code model AND not annoy developers? 'BigCode’ might be the dataset for you

…3.1TB of programming data across 30 languages, filtered for permissive licensing…

Researchers with HuggingFace and ServiceNow Research, have released 'BigCode, a 3.1TB dataset of permissively licensed source code in 30 programming languages. The idea here is to give back more control to code developers about whether their stuff gets used in language models. To do that, The Stack selected code "whose original license was compatible with training an LLM", and The Stack is also "giving developers the ability to have their code removed from the dataset upon request".

One potential issue with current code models is that they don't tend to have a sense of the underlying license information of the code they emit, so they can sometimes emit code that is identical to licensed code, putting developers and deployers in an awkward position. "By releasing an open large-scale code dataset we hope to make training of code LLMs more reproducible," the authors write.

🖥 Meta announced its next-generation AI platform, Grand Teton.

Next-generation AI platform uses NVIDIA H100 GPUs to tackle major AI challenges.

Compared to the company’s previous generation Zion EX platform, the Grand Teton system packs in more memory, network bandwidth and compute capacity, said Alexis Bjorlin, vice president of Meta Infrastructure Hardware, at the 2022 OCP Global Summit, an Open Compute Project conference.

AI models are used extensively across Facebook for services such as news feed, content recommendations and hate-speech identification, among many other applications.

“We’re excited to showcase this newest family member here at the summit,” Bjorlin said in prepared remarks for the conference, adding her thanks to NVIDIA for its deep collaboration on Grand Teton’s design and continued support of OCP.

== Code & Tools ==

Let’s dive in into more technical news.

[Book 📙] Aurélien Géron - 3rd Edition of Hands-on Machine Learning With Scikit-learn, Keras, and Tensorflow

With this updated third edition, author Aurelien Geron explores a range of techniques, starting with simple linear regression and progressing to deep neural networks. Numerous code examples and exercises throughout the book help you apply what you've learned. Programming experience is all you need to get started.

[Post 🏬] Feature Store comparison: 4 Feature Stores - explained and compared

In this blog post, we will simply and clearly demonstrate the difference between 4 popular feature stores: Vertex AI Feature Store, FEAST, AWS SageMaker Feature Store, and Databricks Feature Store. Their functions, capabilities and specifics will be compared on one refcart. Which feature store should you choose for your specific project needs? This comparison will make this decision much easier.

[Tuto 🎡] How to set up ML Monitoring with Evidently. A tutorial from CS 329S: Machine Learning Systems Design

This entry-level tutorial introduces you to the basics of ML monitoring. It requires some knowledge of Python and experience in training ML models.

During this tutorial, you will learn:

Which factors to consider when setting up ML monitoring

How to generate ML model performance dashboards with Evidently

How to investigate the reasons for the model quality drop

How to customize the ML monitoring dashboard to your needs

How to automate ML performance checks with MLflow or Airflow

By the end of this tutorial, you will know how to set up ML model monitoring using Evidently for a single ML model that you use in batch mode.

#Amazon #HeartAttack #Meta #NVidia

A big thanks to our sources: https://jack-clark.net/, https://www.actuia.com/, https://thevariable.com/news/, https://techcrunch.com/, https://read.deeplearning.ai/the-batch/

What about you? Have you noticed something else?

Don’t miss the next news!

Have a good week-end,

Maxime 🙃 from Toulouse, France with 🌺