FocusAI - Edition #96

FocusAI: The latest AI news in just 3 minutes.

Edition #96 - April 22nd, 2022

If you like FocusAI, spread the word!

This week, three articles:

☠️ AI Designs Chemical Weapons

😛 When language models can be smaller and better!

🐅 Animal Animations From Video

☠️ AI Designs Chemical Weapons

It’s surprisingly easy to turn a well-intended machine learning model to the dark side.

In an experiment, Fabio Urbina and colleagues at Collaborations Pharmaceuticals, who had built a drug-discovery model to design useful compounds and avoid toxic ones, retrained it to generate poisons. In six hours, the model generated 40,000 toxins, some of them actual chemical warfare agents that weren’t in the initial dataset.

The authors withheld details of the architecture, dataset, and method to avoid encouraging bad actors. What is known comes from the few particulars they did reveal, along with accounts of the company’s existing generative model, MegaSyn.

Designing effective safeguards for machine learning research and implementation is a very difficult problem. What is clear is that we in the AI community need to recognize the destructive potential of our work and move with haste and deliberation toward a framework that can minimize it.

😛 When language models can be smaller and better!

DeepMind says we can make better language models if we use more data.

DeepMind finds that language models like GPT-3 perform dramatically better when trained on far more data than is typical. Concretely, by training a model called Chinchilla on 1.4 trillion tokens, the team substantially outperforms larger models (e.g., Gopher) that were trained on smaller datasets (e.g., 300 billion tokens). As a bonus, models trained this way are cheaper to fine-tune on other datasets and to sample from, thanks to their smaller size.

To test its ideas, the team trained a language model named Chinchilla with the same compute budget as DeepMind's Gopher model. Chinchilla has 70 billion parameters (versus Gopher's 280 billion) and uses 4x more data. In tests, Chinchilla outperforms Gopher, GPT-3, Jurassic-1, and Megatron-Turing NLG "on a large range of downstream evaluation tasks".
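For readers who want to check the "same compute" claim, here is a rough back-of-the-envelope sketch. It assumes the common rule of thumb that training cost is about 6 x parameters x tokens FLOPs. That heuristic is not stated in the text above, and the helper names are purely illustrative. The parameter and token counts are the ones quoted in this article.

```python
# Back-of-the-envelope check, NOT DeepMind's own accounting.
# Assumption: training FLOPs ~= 6 * parameters * tokens (a widely used heuristic).

def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute in FLOPs using the 6 * N * D heuristic."""
    return 6 * params * tokens

# Figures quoted in the article above.
MODELS = {
    "Gopher": {"params": 280e9, "tokens": 300e9},
    "Chinchilla": {"params": 70e9, "tokens": 1.4e12},
}

def summarize(models: dict) -> dict:
    """Return estimated FLOPs and tokens-per-parameter for each model."""
    rows = {}
    for name, m in models.items():
        rows[name] = {
            "flops": training_flops(m["params"], m["tokens"]),
            "tokens_per_param": m["tokens"] / m["params"],
        }
    return rows

summary = summarize(MODELS)
for name, row in summary.items():
    print(f"{name}: ~{row['flops']:.2e} FLOPs, "
          f"{row['tokens_per_param']:.1f} tokens per parameter")
```

Under this heuristic the two training budgets land within roughly 20 percent of each other, while Chinchilla sees about 20 tokens per parameter compared with about 1 for Gopher.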

This is an important insight that is likely to change how most developers of large-scale models approach training. "Though there has been significant recent work allowing larger and larger models to be trained, our analysis suggests an increased focus on dataset scaling is needed," the researchers write. "Speculatively, we expect that scaling to larger and larger datasets is only beneficial when the data is high-quality. This calls for responsibly collecting larger datasets with a high focus on dataset quality."

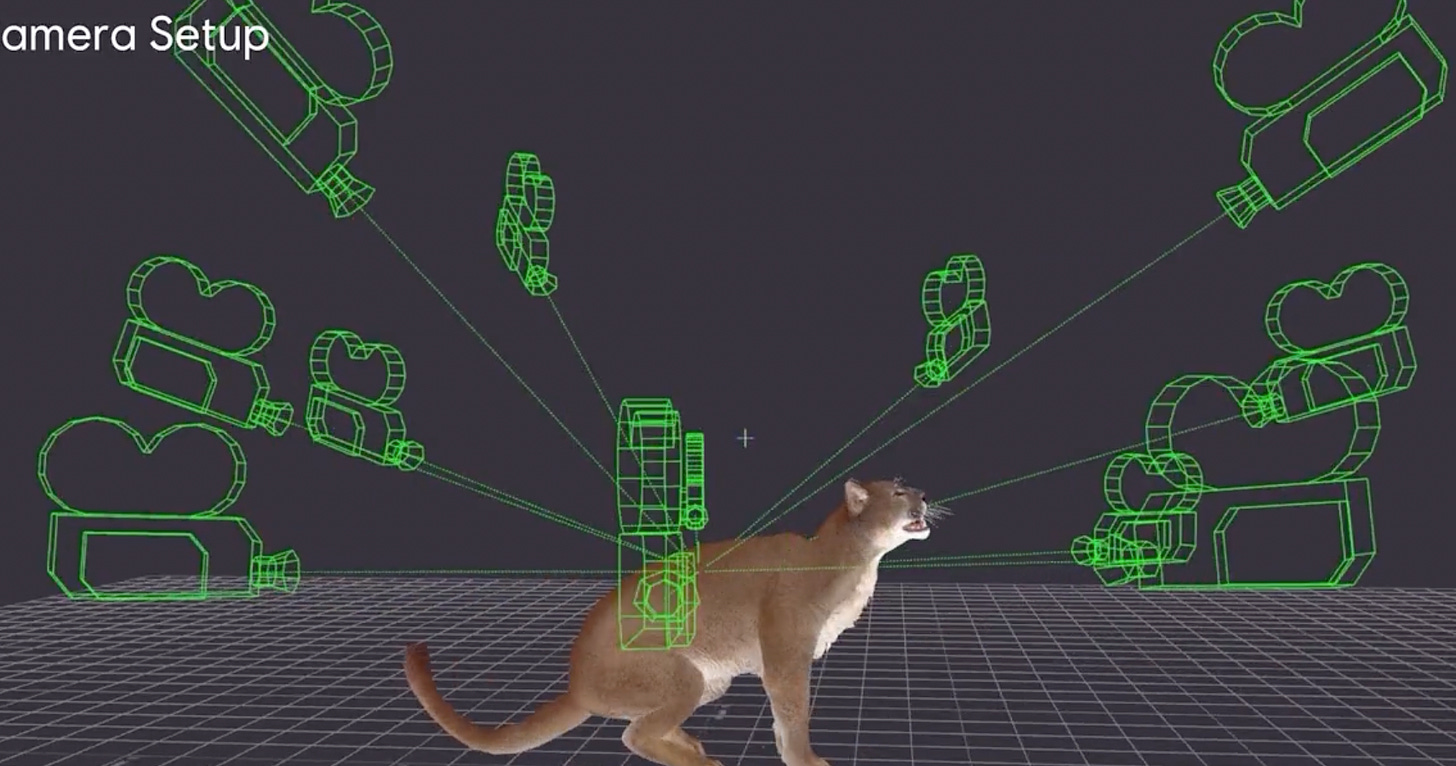

🐅 Animal Animations From Video

Ubisoft showed off ZooBuilder, a pipeline of machine learning tools that converts videos of animals into animations. The system is a prototype and hasn’t been used in any finished games.

In the absence of an expensive dataset that depicts animals in motion, researchers at Ubisoft China and elsewhere generated synthetic training data from the company’s existing keyframe animations of animals. They described the system in an earlier paper.

Yes, but: ZooBuilder was initially limited to cougars and had trouble tracking them when parts of their bodies were occluded or out of the frame, or when more than one creature was in the frame. Whether Ubisoft has overcome these limitations is not clear.

It can take months of person-hours to animate a 3D creature using the typical keyframe approach. Automated systems like this promise to make animators more productive and could liberate them to focus on portraying in motion the fine points of an animal’s personality.

#DeepMind #Poison #Ubisoft

A big thanks to our sources: https://jack-clark.net/, https://www.actuia.com/, https://thevariable.com/news/, https://techcrunch.com/, https://read.deeplearning.ai/the-batch/

What about you? Have you noticed something else?

Don’t miss the next edition!

Have a good weekend,

Verónica 🐕, Maxime 🙃 from Toulouse, France with 🌺